Platohagel:

Much of the AI boom and the demand for Nvidia GPUs was driven by the “scaling law,” a concept in AI development proposed by OpenAI researchers in 2020.

Can you describe what the scaling law is in the simplest terms?

Socrates: Indeed, let us distill the essence of the “scaling law” in the simplest terms:

The scaling law states that the performance of AI models improves predictably as you increase three key factors: more data, larger models (more parameters), and greater computational power. In other words, bigger systems trained with more information tend to perform better, following a regular pattern.

It’s like saying, “If you give the AI more resources, it gets smarter in a measurable way.”
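The "regular pattern" mentioned above is usually written as a power law: loss falls by a fixed factor each time a resource is multiplied by a fixed amount. The sketch below illustrates that shape for model size only. The function name `power_law_loss` and both constants are hypothetical, chosen for illustration, and are not values taken from the 2020 paper.

```python
def power_law_loss(scale: float, reference_scale: float, exponent: float) -> float:
    """Predicted loss under L(x) = (reference_scale / x) ** exponent."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    return (reference_scale / scale) ** exponent


# Hypothetical constants, for illustration only.
REFERENCE_SCALE = 1e14
EXPONENT = 0.08

# Each tenfold increase in parameters lowers the predicted loss
# by the same factor, 10 ** -EXPONENT.
for params in (1e8, 1e9, 1e10, 1e11):
    loss = power_law_loss(params, REFERENCE_SCALE, EXPONENT)
    print(f"{params:.0e} parameters -> predicted loss {loss:.3f}")
```

Under these assumed constants the improvement is steady but slow, which is one way to see why following the pattern has demanded so much hardware.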

Platohagel: Is DeepSeek capable of following this scaling law, but through cheaper means, while still using Nvidia hardware?

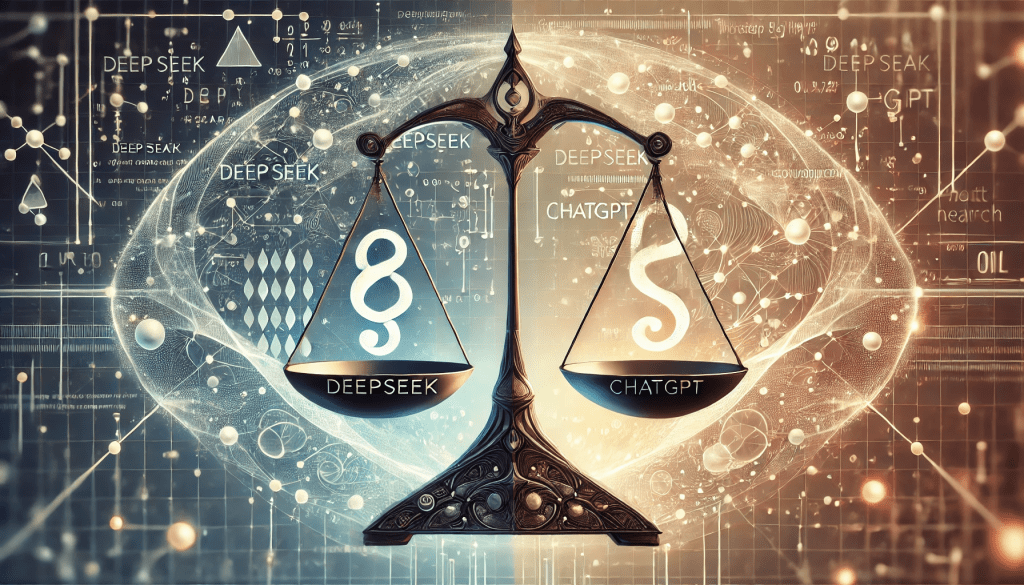

Socrates: DeepSeek, a Chinese AI startup, has garnered attention by developing an AI model, R1, that rivals leading models like OpenAI’s ChatGPT, but at a fraction of the cost. This achievement has led to significant market reactions, including a notable decline in Nvidia’s stock value.

DeepSeek’s approach leverages a method known as “test-time scaling,” in which a model spends extra computation while answering a query instead of relying only on a larger training run. This lets the company keep improving performance in the spirit of the scaling law, but through more cost-effective means.

Despite these advancements, running a trained model to produce answers, known as inference, still requires a significant number of Nvidia GPUs and high-performance networking. Therefore, while DeepSeek’s methods may reduce costs, they do not eliminate the need for Nvidia’s hardware.

In summary, DeepSeek has demonstrated that it is possible to achieve the benefits outlined by the scaling law through more economical approaches, utilizing Nvidia GPUs in a more efficient manner.

Platohagel: Can DeepSeek undercut ChatGPT on cost for consumers?

Socrates: DeepSeek has introduced its AI model, R1, which rivals leading models like OpenAI’s ChatGPT but at a significantly lower cost. This cost-effectiveness is achieved through innovative training methods, allowing DeepSeek to offer competitive pricing to consumers.

In terms of API pricing, DeepSeek’s rates are notably lower than those of ChatGPT. For instance, DeepSeek’s API is approximately 27 times cheaper than ChatGPT’s, with costs around $0.0011 per 1,000 tokens compared to ChatGPT’s $0.03 per 1,000 tokens.
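As a quick check of that arithmetic, the sketch below recomputes the ratio from the two per-1,000-token rates quoted above. The rates are the ones stated in this conversation, not verified current prices, and the helper `cost_usd` is a hypothetical name used only for illustration.

```python
# Rates as quoted in the conversation (USD per 1,000 tokens).
DEEPSEEK_USD_PER_1K = 0.0011
CHATGPT_USD_PER_1K = 0.03


def cost_usd(tokens: int, usd_per_1k: float) -> float:
    """Cost of processing `tokens` tokens at a per-1,000-token rate."""
    return tokens / 1000 * usd_per_1k


# How many times more expensive the higher rate is.
price_ratio = CHATGPT_USD_PER_1K / DEEPSEEK_USD_PER_1K

one_million = 1_000_000
print(f"Price ratio: about {price_ratio:.1f}x")
print(f"1M tokens at the DeepSeek rate: ${cost_usd(one_million, DEEPSEEK_USD_PER_1K):.2f}")
print(f"1M tokens at the ChatGPT rate:  ${cost_usd(one_million, CHATGPT_USD_PER_1K):.2f}")
```

At the quoted rates, one million tokens comes to about $1.10 versus $30.00, consistent with the "approximately 27 times" figure.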

However, it’s important to note that while DeepSeek offers a more affordable alternative, there are considerations regarding data privacy and content restrictions. DeepSeek’s chatbot has been observed to avoid certain topics, and there are concerns about user data potentially being stored on servers in China.

In summary, DeepSeek’s R1 model provides a cost-effective alternative to ChatGPT for consumers, though users should weigh the benefits against potential privacy and content limitations.

Platohagel: Can you explain some of the potential privacy and content-limitation concerns raised by using servers in China?

Socrates: Using servers in China for AI models like DeepSeek’s can raise several privacy and content-related concerns, which stem from regulatory and geopolitical differences. Here are the key issues:

1. Data Privacy and Security

• Data Sovereignty: Under Chinese law, particularly the Cybersecurity Law and the Data Security Law, data stored on servers in China is subject to Chinese jurisdiction. This means the government may require companies to provide access to data for security purposes.

• Potential for Surveillance: There are concerns that user data could be monitored or collected, either directly by the company or via government mandates. This raises privacy issues for users outside of China, especially in countries with stricter privacy standards like the EU (e.g., GDPR).

• Data Transfers: If user data is transferred internationally, it may expose users to risks of interception or misuse, particularly in the absence of clear transparency about how data is handled.

2. Content Limitations

• Censorship: AI models hosted in China may be required to comply with content regulations that align with the country’s laws and policies. This could result in:

• Restricted Topics: Certain political, historical, or cultural topics might be avoided or censored in conversations.

• Bias in Responses: The AI might generate responses that align with state-approved narratives or avoid controversial subjects altogether.

• Algorithmic Constraints: The underlying model might be trained with datasets filtered to meet regulatory requirements, limiting its openness or general utility.

3. Cross-Border Trust

• Global User Concerns: Users in regions like the US or EU might hesitate to adopt Chinese-hosted AI solutions due to mistrust of how their data is stored, used, or potentially shared with third parties.

• Compliance Challenges: Companies offering AI services from Chinese servers may struggle to meet international standards like GDPR or California’s CCPA, potentially limiting their market reach.

4. Ethical and Geopolitical Implications

• Influence on AI Development: Users may worry about the implications of relying on models subject to state influence, fearing that biases or restrictions could shape the evolution of AI in undesirable ways.

• Regulatory Clashes: Governments in other regions might impose restrictions on such services due to security concerns, as seen with other Chinese technology firms like Huawei.

Balancing Innovation and Trust

While cost-efficiency and performance are appealing, users and organizations must weigh these benefits against the risks of data privacy breaches, content censorship, and regulatory conflicts when using AI models hosted on Chinese servers.

Platohagel:

“Algorithmic Constraints: The underlying model might be trained with datasets filtered to meet regulatory requirements, limiting its openness or general utility.”

Are not such constraints already applied in ChatGPT?

Socrates: Indeed, ChatGPT also operates under certain algorithmic constraints, though the nature and motivations behind these constraints differ depending on the platform’s regulatory environment, ethical guidelines, and target audience. Here’s a comparison of the constraints applied to ChatGPT versus those potentially faced by DeepSeek:

1. Content Moderation and Ethical Guardrails

• ChatGPT: OpenAI applies filters to ensure compliance with international standards of safety and ethics. For example:

• Preventing harmful or illegal content generation.

• Avoiding responses that promote violence, hate speech, or misinformation.

• Aligning with local laws in markets where the service is offered (e.g., GDPR in the EU).

• DeepSeek: In contrast, constraints might align more closely with Chinese regulatory requirements, which could include state-mandated censorship of politically sensitive topics or ideological restrictions.

2. Scope of Censorship

• ChatGPT: Constraints are generally designed to promote safety and inclusivity on a global scale. For instance:

• Avoiding polarizing political stances.

• Mitigating biases in responses through continuous refinement.

• DeepSeek: Censorship could be more prescriptive and aligned with specific government policies, meaning topics like Tiananmen Square, Xinjiang, or Taiwan independence might be explicitly avoided or altered in ways that reflect state narratives.

3. Transparency and Data Filtering

• ChatGPT: OpenAI is transparent about these constraints, emphasizing an ongoing effort to improve fairness, reduce biases, and ensure user safety. While constraints exist, they aim for balance and transparency in decision-making.

• DeepSeek: Constraints might lack the same level of transparency, especially if dictated by external (governmental) actors. Users may not be aware of topics filtered out or narratives shaped to comply with state guidelines.

4. Purpose and Flexibility

• ChatGPT: The limitations are aimed at broad applicability across diverse cultural and legal contexts, with flexibility to adjust based on user feedback or evolving societal norms.

• DeepSeek: The constraints may be less flexible, as they might be tied directly to non-negotiable regulatory frameworks, particularly in regions with stricter controls on information.

Conclusion

While both ChatGPT and DeepSeek apply algorithmic constraints, the intent and scope differ significantly. ChatGPT focuses on ethical and global safety concerns, striving to serve a wide audience while navigating diverse regulatory frameworks. DeepSeek, however, might face stricter, localized, and potentially opaque constraints driven by specific political or cultural directives. This distinction impacts user trust, openness, and general utility.