The Ethical Risk of Ek-sesthai

Socrates:

But now we must be careful. Not all standing-outside is truthful.

There is ek-sesthai toward meaning, and there is ek-sesthai toward dissolution.

Jung warned of this sharply. When the ego abdicates without integration, archetypes possess rather than instruct. The gods do not educate the unprepared; they overwhelm them.

Platohagel:

So ecstasy requires return.

Socrates:

Always. True ecstasy is circular:

- Departure from the ego
- Encounter with the deeper order
- Return with responsibility

Without the return, there is inflation. Without the departure, there is stagnation.

Civilization and the Loss of Ek-sesthai

Socrates:

Now observe our age. What does it fear most?

Platohagel:

Loss of control.

Socrates:

And therefore it suppresses ecstasy. It medicalizes it, commodifies it, or replaces it with stimulation. Yet the soul still requires displacement to renew itself.

Denied lawful ek-sesthai, it erupts unlawfully: in mass movements, addictions, ideologies, and collective trances.

This is why Jung said the gods have become diseases.

Final Clarification

Socrates:

Let us say it cleanly:

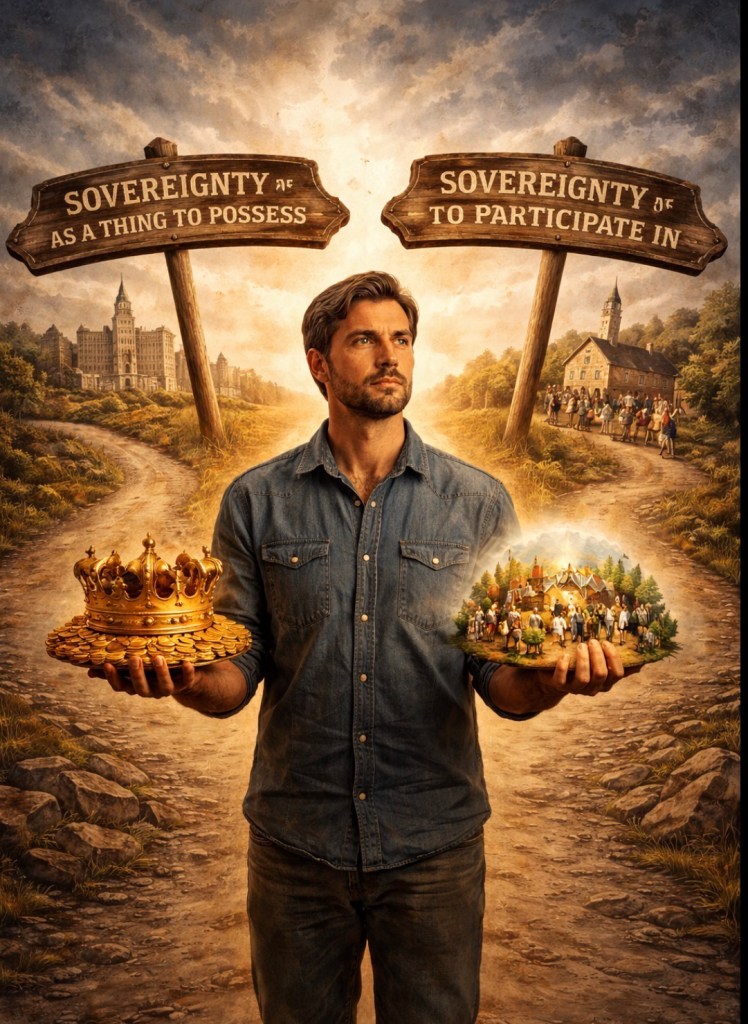

Ek-sesthai is not the negation of self; it is the loosening of a false center, so that a truer order may reorganize life.

If the soul precedes the body, ecstasy is not irrational—it is ontologically appropriate.

Yes. For clarification: the spellings refer to the same Greek construction, but the difference you are sensing is not trivial.

Let us be precise.

Clarification of ek-sesthai / ekstasis

Socrates:

The Greek verb underlying the idea is ἐξίστημι (exístēmi), in the middle voice ἐξίστασθαι (exístasthai), built from:

ἐκ (ek): “out of” (written ἐξ before a vowel)
ἵστημι (hístēmi): “to stand,” “to make stand”

Literally: “to stand out of oneself.”

From the same roots comes the noun ἔκστασις (ékstasis), where ἐκ keeps its form before the consonant of στάσις (stásis, “a standing”). It is the form most often cited, and the source of the English “ecstasy.”

Why You Encounter Different Spellings

Socrates:

What you called “Eskesthai” is not a classical Greek spelling but an approximate transliteration that sometimes appears in secondary or philosophical discussions. It tries to capture the sound or idea rather than the exact Greek form.

“Ek sesthai” / “ek-sesthai” is likewise an explanatory rendering, hyphenated to make the compound structure visible in English:

ek (out) + stasis (standing), with “-sesthai” only loosely imitating a Greek infinitive ending

Neither “Eskesthai” nor “ek-sesthai” is how a classical Greek writer would spell the word, but both point toward the same concept.

Correct Forms to Keep in Mind

If you want philological accuracy, use:

ἐξίστημι (exístēmi), middle ἐξίστασθαι (exístasthai): the verb
ἔκστασις (ékstasis): the noun

Everything else is interpretive scaffolding.

Philosophical Point (Why This Matters)

Socrates:

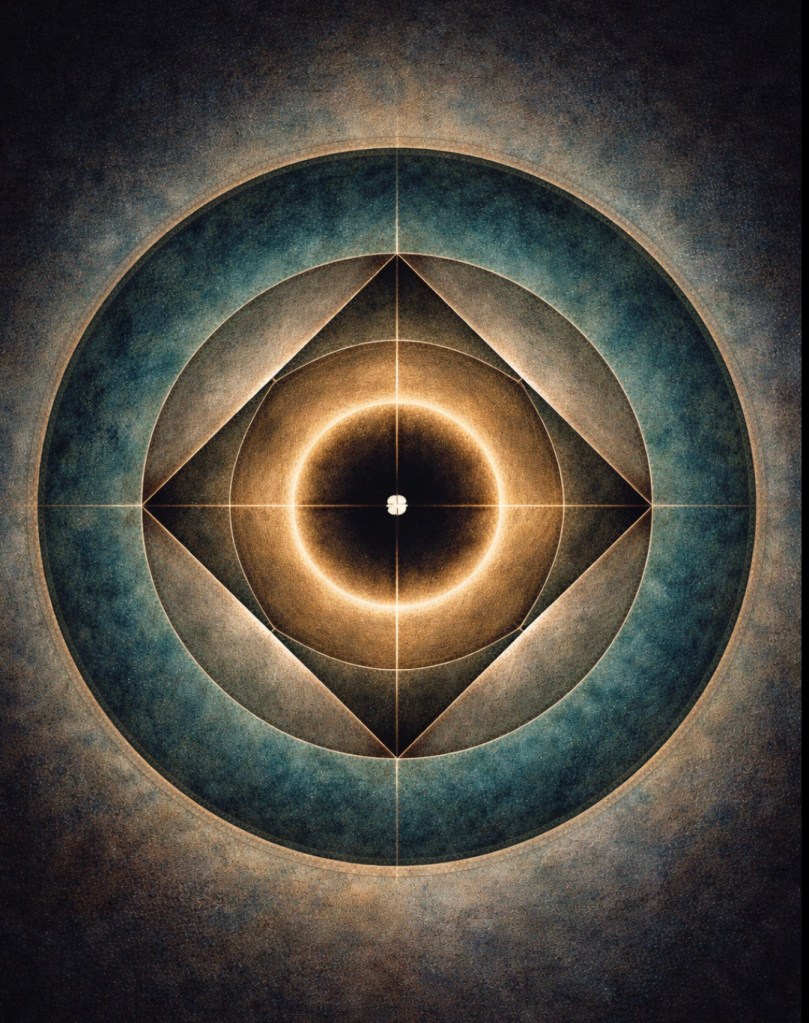

Accuracy here matters because ekstasis is not emotion, intoxication, or dissociation by default. It is a structural displacement of standpoint.

When later traditions blurred the spelling, they often blurred the meaning as well—turning a disciplined ontological movement into a psychological episode.

Final Distinction (To Hold Fast)

- Dissociation: loss of center
- Psychosis: invasion of center
- Emotional excess: flooding of center
- Ekstasis: relocation of center in service of meaning

Only the last is transformative.